My clients for price-setting and price analysis are:

- MTS Systems (testing equipment manufacturer)

- Target (Roundel digital advertising division)

Given the sensitivity of that work, I can only provide on this page a discussion of the technical aspects of two of my projects, a redacted work sample for one, and no discussion of another. I would leave it to my references to decide what they would wish to share.

MTS Systems

Eden Prairie, MN

Pricing tool for thousands of after-market products

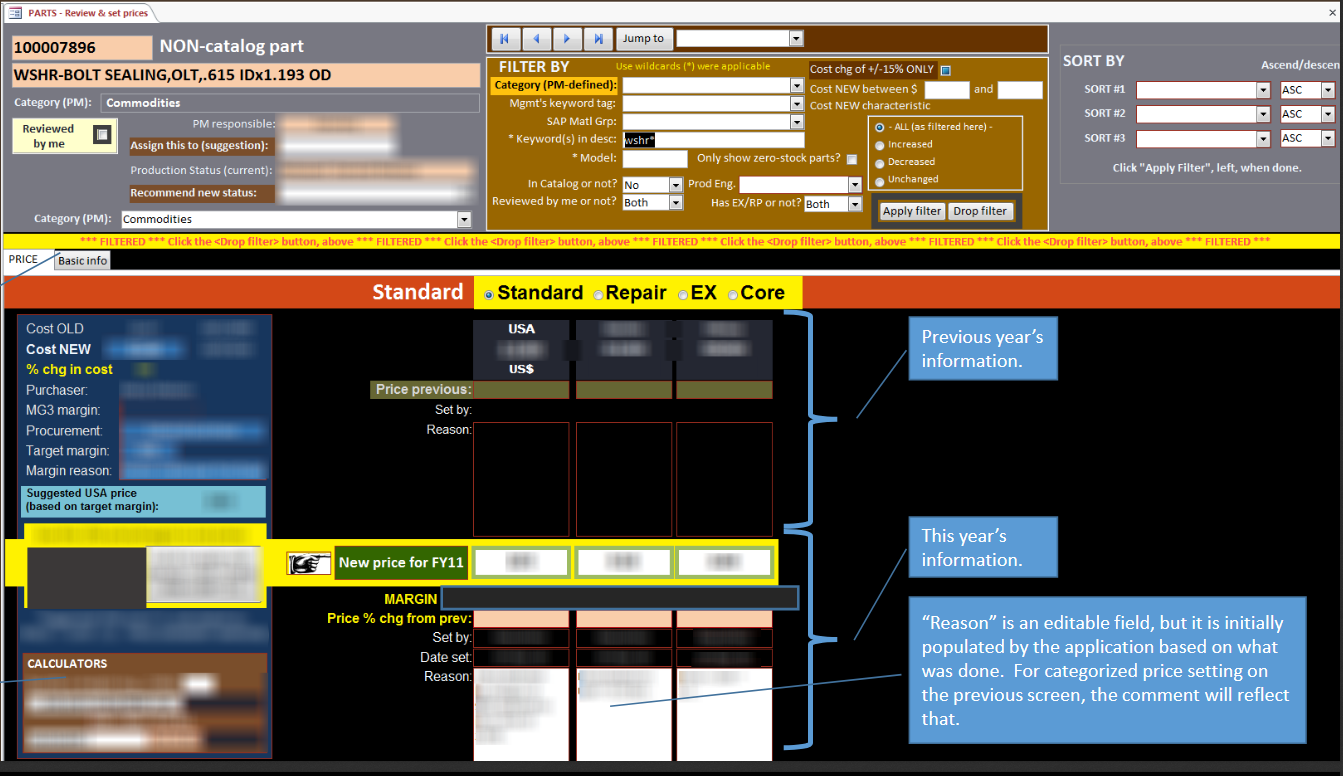

Screenshot of the PACRT application. See a short slideshow of this user interface.

MTS Systems is one of the world's largest manufacturers of test equipment. This is equipment that shakes, twists, bends, squeezes, stretches, and spins things for quality testing. They also sell replacement parts and other maintenance products for its equipment. These last two types of offerings are called "after-market products."

MTS Systems has about 6,000 such after-market products, ranging from screws to large assemblies used in earthquake simulators to test building codes. A staff of six product managers had to set prices for these after-market products every year and within a small window of time. Ideally, price decisions would be based on the latest cost, customer demand, price of alternatives, volume packaging, and so on. In reality, there was not enough time for a product manager to make informed decisions on all of the almost 1,000 parts for which they were responsible. This is why I was asked to find a solution.

My solution was a distributed application I called "PACRT": Price And Cost Review Tool (pronounced “pack-rat”). It consists of a SQL Server datamart produced from SAP/ERP data and a user interface built with MS Access and VBA.

The primary features of PACRT are:

- Users create custom product categories and assign products to them.

- Users can view cost, price, and other information by category or individual product.

- Users can set prices for an entire category using a formula (such as target margin). They can still set or change a price for an individual product at any time.

- A quality assurance screen lets users identify problems: they select a problem type from a menu, then see which of their prices have that problem.

With filters on the back-end, each manager sees information only on their products and no one else’s. A management version of the app lets the supervisor see progress reports and review any product manager’s work using the same quality assurance tools the product managers have.

With PACRT, a product manager can now make informed pricing decisions on ~1,000 products in a few days. PACRT was so successful that I was asked to create similar applications for the pricing of software and of services, which I did.

Work sample: Slideshow of PACRT user interface.

Automated price-setting for tens thousands of products daily

This case study will focus on my business analyst role in this project for a major retailer where I was also the developer and data hygienist.

Retailer was losing access to a third-party product pricing tool unexpectedly. They had invested a large amount of time in defining and refining pricing algorithms and other strategy components in this tool. They therefore wanted a new pricing system that could leverage similar functionality. Retailer was also dissatisfied with many features of the 3rd-party tool. They therefore did not want a simple replacement of the old system, but something new and better.

The immediate need was to have enough functionality in place to avoid disruption of business when the 3rd-party tool went away. Once that was met, Retailer wanted to build new functionality into the system. Although Retailer envisioned eventually having a browser-based tool as their interface for the system I would create, they understood that an MS Access front-end was the fastest way to get the application they needed. Access would also simplify adding enhancements. The Access user interfaces I would create would serve as working prototypes for the eventual browser-based interfaces. The databases and stored procedures I would create would persist regardless of the user interface used.

PART 1: What could go wrong?

After gathering requirements and composing my use cases, I identified three critical questions whose answers would determine the project’s success or failure. They were:

1) Were Retailer’s existing pricing algorithms useable in Teradata (the database management system I would have to use)?

2) Could I write Teradata stored procedures that could produce what was needed within the nightly time window?

3) Could I create those stored procedures — tested, debugged, and ready for production — before the 3rd party tool went away?

Following my principle of failing quickly, I dealt with those critical areas first:

I was able to quickly verify that the Retailer’s algorithms would work in Teradata, although some data hygiene would be needed. This meant the project did not need to divert time and effort to recreate all the algorithms. Determining this quickly meant more time was available to build and test more functionality in the first release of the system.

Before I began outlining my new Teradata database and stored procedures, I worked with the client to create a mock-up of the reviewer’s app I would later need to create. Getting approval on this mock-up now rather than later determined what ancillary information my stored procedures and tables would need to capture.

I then created an MS Access prototype to develop and test the concept I had in mind for the Teradata tables and stored procedures. This prototyping confirmed my concept and refined its details. I had only to translate my MS Access table structures and VBA programming into Teradata.

With a tentative plan created, I met with a Teradata database administrator (DBA) to learn about the process that prepared the input data my own process would use. I learned that it would take hours to compile all the inputs my stored procedures would need. This pre-processing time reduced the time window for my own process. I suggested and the DBA agreed I should have my own pre-processing scripts that would identify all possible components for that night’s pricing process. When input data became available to my system, my scripts would only need to filter by the factors in that input data to arrive at the set of components needed for that night’s processing. I therefore traded efficiency for the certainty of on-time completion.

To create working code as quickly as possible, I needed to quickly verify that the inputs and outputs of each step in my automated process were correct. To support this, I outlined my Teradata scripts to produce temporary tables for each step. Similarly, I divided the automated process into separate stored procedures comprised of a small set of related tasks. I could therefore validate one large part of the automated process before starting to write the next. This modularization saved me from the time-consuming process of debugging a single large, complex stored procedure.

With all the critical areas addressed successfully and with key lessons learned, I could begin to organize my requirements backlog for each of my sprints. Although I generally completed one component of my system — database, scripts, reviewer interface, and administrator interface — before proceeding to the next, I did have one major digression. While testing my scripts, I found they were failing due to syntax problems in some algorithms. These were problems my earlier quality checks had missed. I therefore created a syntax testing tool, intended for the administrator interface, far ahead of my original schedule. This helped repair the algorithms and so got my scripts into user acceptance testing sooner.

As planned, I later integrated this testing tool into the administrator interface. It enables new and revised algorithms to be tested for syntax and tentative results before they go into production.

PART 2: Enhancements are simple thanks to good code structure

After the first release of the reviewer interface, I talked with price reviewers about their experience. They expressed their frustration with not being able to quickly make decisions on batches of prices that fit certain criteria. This functionality had not existed in the previous 3rd-party system nor had price reviewers mentioned it when I gathered requirements, but they considered it a need now. I therefore suggested to the client that I create this for the next major release of the app. They agreed.

With that next major release, reviewers can now filter products by up to five criteria and make a pricing decision on that filtered set. The five filtering criteria could be selected from dozens of fields and include user-specified ranges and comparisons, such as “>=” and “BETWEEN.”

Creating this new feature was greatly simplified by how I had structured my programming in the user interface: I had organized my computer code into separate stored procedures based on the action performed --- in effect, creating building blocks of code. Therefore, to create the new filter-and-decide feature, I only had to create a few new building blocks of code, then add just one command in the existing code to bring those new blocks together. By only having to add one small change to one existing block of code, I only needed to test that one block and the new ones I created. I did not need to retest the entire application (a very lengthy process). My "building block" approach ensures future enhancements can be rolled out just as easily.

As business analyst on this Retailer project, I addressed critical areas first, prototyped the solution, and modularized my automated processes into "building blocks". As the sole developer and Quality manager on the project, I used my programming and data hygiene skills to deliver a working pricing system in time to avoid business disruption. My subsequent efforts in continuous improvement led to final versions of the user interfaces which, the client has told me, exceeded their expectations.

Copyright Will Beauchemin 2024